Linear regression for data science

Part 1: to be completed at home before the lab

In this lab, you will learn how to handle many variables with regression by using variable selection techniques, shrinkage techniques, and how to tune hyper-parameters for these techniques. This practical has been derived from chapter 6 of ISLR. You can download the student zip including all needed files for practical 4 here.

Note: the completed homework has to be handed in on Black Board and will be graded (pass/fail, counting towards your grade for the individual assignment). The deadline is two hours before the start of your lab. Hand-in should be a PDF or HTML file.

In addition, you will need for loops (see also lab 1), data manipulation techniques from Dplyr, and the caret package (see lab week 3) to create a training, validation and test split for the used dataset. Another package we are going to use is glmnet. For this, you will probably need to install.packages("glmnet") before running the library() functions.

library(ISLR)

library(glmnet)

library(tidyverse)

library(caret)Best subset selection

Our goal is to to predict Salary from the Hitters dataset from the ISLR package. In this at home section, we will do the pre-work for best-subset selection. First, we will prepare a dataframe baseball from the Hitters dataset where you remove the baseball players for which the Salary is missing. Use the following code:

baseball <- Hitters %>% filter(!is.na(Salary))We can check how many baseball players are left using:

nrow(baseball)## [1] 2631. a) Create baseball_train (50%), baseball_valid (30%), and baseball_test (20%) datasets using the createDataPartition() function of the caret package.

# seed() function is used to create random numbers that can be reproduced; it helps creating the same output when a function that contains randomness is called.

set.seed(5462)

# define the training partition

train_index <- createDataPartition(baseball$Salary, p = .5,

list = FALSE,

times = 1)

# split the data using the training partition to obtain training data

baseball_train <- baseball[train_index,]

# remainder of the split is the validation and test data (still) combined

baseball_val_and_test <- baseball[-train_index,]

# split the remaining 50% of the data in a validation and test set

val_index <- createDataPartition(baseball_val_and_test$Salary, p = .6,

list = FALSE,

times = 1)

baseball_valid <- baseball_val_and_test[val_index,]

baseball_test <- baseball_val_and_test[-val_index,]

# Outcome of this section is that the data (100%) is split into:

# training (~50%)

# validation (~30%)

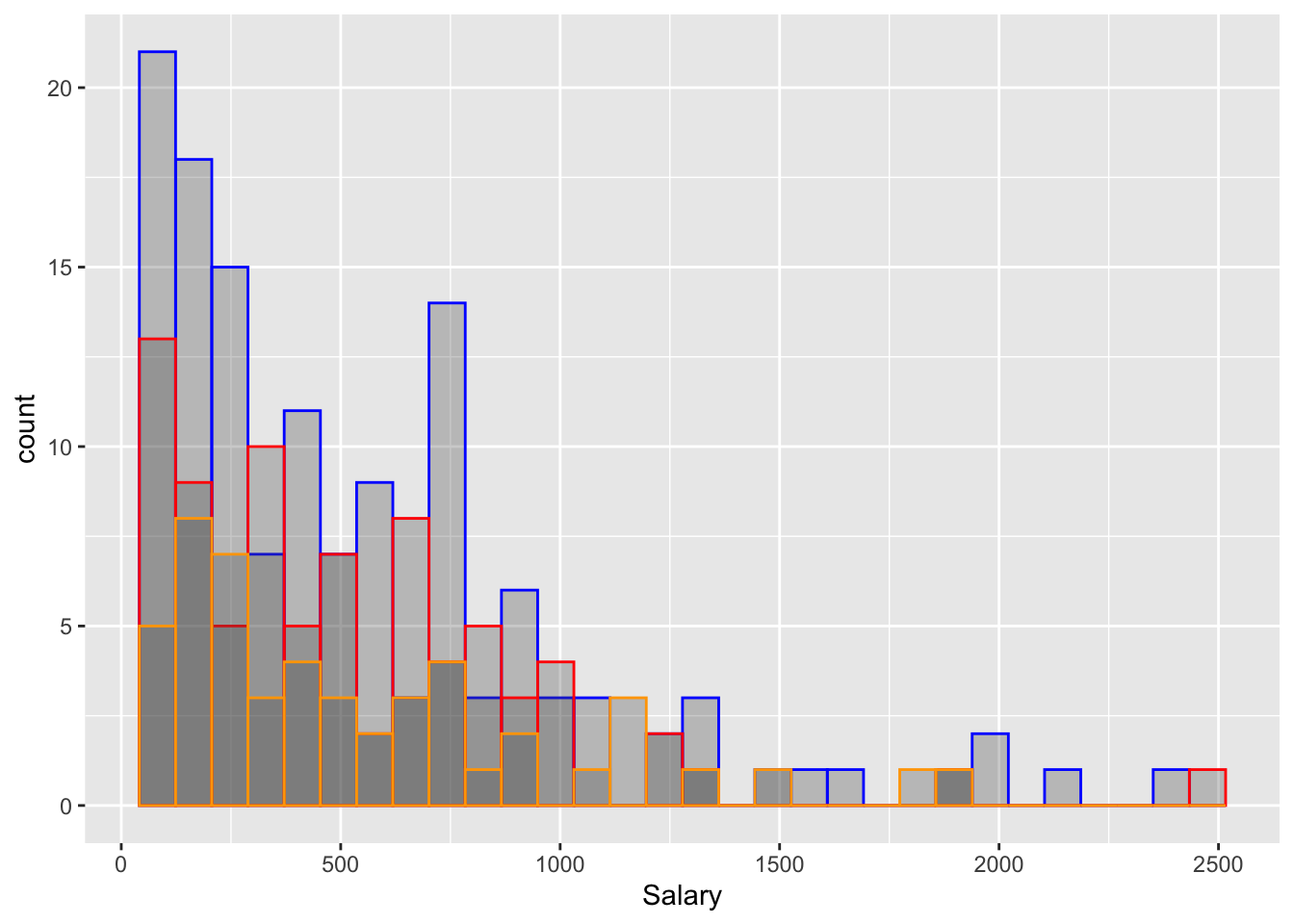

# test (~20%)1. b) Using your knowledge of ggplot from lab 2, plot the salary information of the train, validate and test groups using geom_histogram() or geom_density()

ggplot() +

geom_histogram(data = baseball_train, mapping = aes(x = Salary), alpha = 0.3, colour = "Blue") +

geom_histogram(data = baseball_valid, mapping = aes(x = Salary), alpha = 0.3, colour = "Red") +

geom_histogram(data = baseball_test, mapping = aes(x = Salary), alpha = 0.3, colour = "Orange") ## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

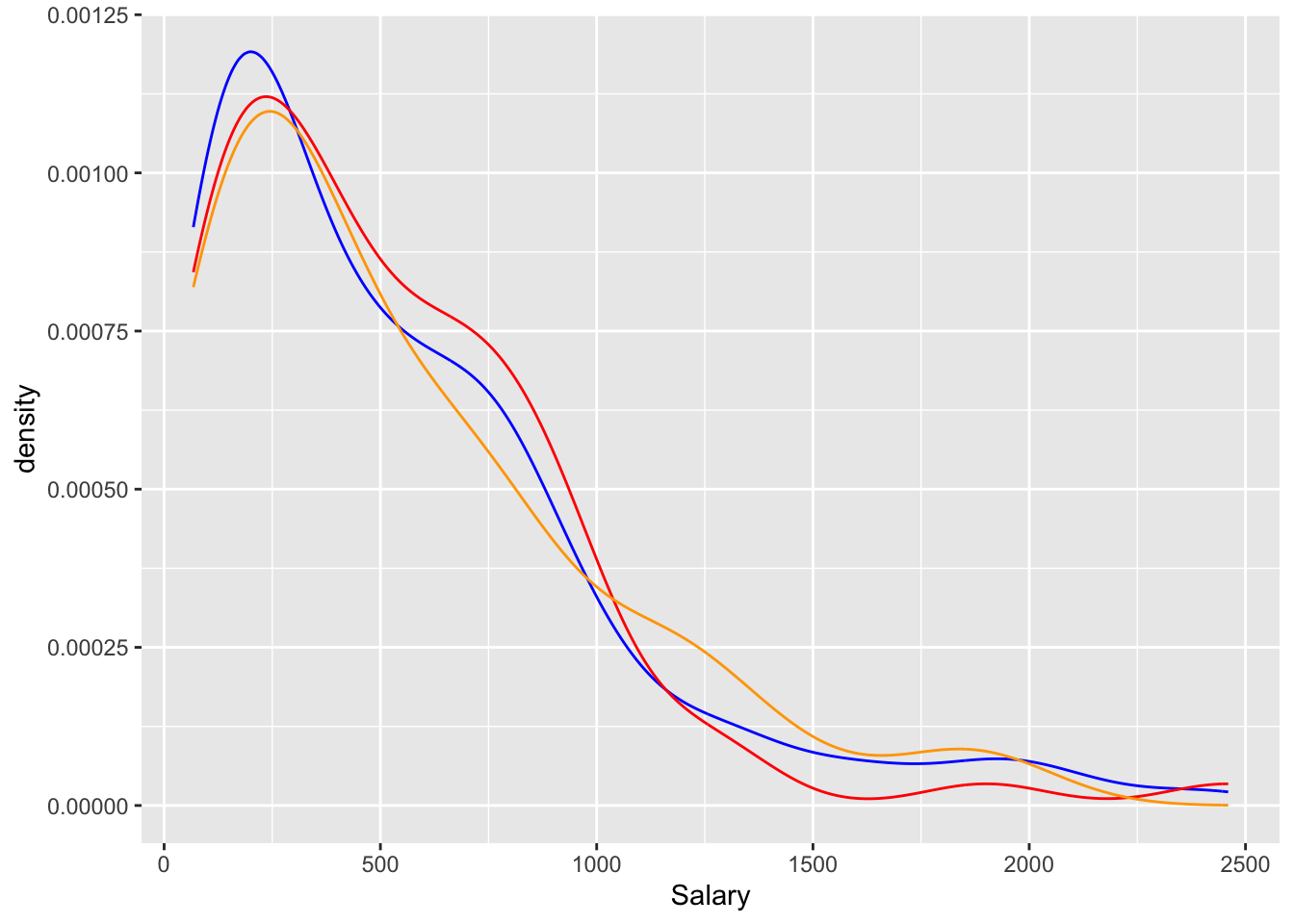

ggplot() +

geom_density(data = baseball_train, mapping = aes(x = Salary), alpha = 0.3, colour = "Blue") +

geom_density(data = baseball_valid, mapping = aes(x = Salary), alpha = 0.3, colour = "Red") +

geom_density(data = baseball_test, mapping = aes(x = Salary), alpha = 0.3, colour = "Orange")

We will use the following function which we called lm_mse() to obtain the mse on the validation dataset for predictions from a linear model:

lm_mse <- function(formula, train_data, valid_data) {

y_name <- as.character(formula)[2]

y_true <- valid_data[[y_name]]

lm_fit <- lm(formula, train_data)

y_pred <- predict(lm_fit, newdata = valid_data)

mean((y_true - y_pred)^2)

}Note that the input consists of (1) a formula, (2) a training dataset, and (3) a test dataset.

2. Try out the function with the formula Salary ~ Hits + Runs, using baseball_train and baseball_valid.

lm_mse(Salary ~ Hits + Runs, baseball_train, baseball_valid)## [1] 156921.4We have pre-programmed a function for you to generate a character vector for all formulas with a set number of p variables. You can load the function into your environment by sourcing the .R file it is written in:

source("generate_formulas.R")You can use it like so:

3. Create a character vector of all predictor variables from the Hitters dataset. colnames() may be of help. Note that Salary is not a predictor variable.

x_vars <- colnames(Hitters)

x_vars <- x_vars[x_vars != "Salary"]4. Using the function generate_formulas() (which is inlcuded in your project folder for lab week 4), generate all formulas with as outcome Salary and 3 predictors from the Hitters data. Assign this to a variable called formulas. There should be 969 elements in this vector.

formulas <- generate_formulas(p = 3, x_vars = x_vars, y_var = "Salary")

length(formulas)## [1] 9695. We will use the following code to find the best set of 3 predictors in the Hitters dataset based on MSE using the baseball_train and baseball_valid datasets. Annotate the following code with comments that explain what each line is doing.

mses <- rep(0, 969)

for (i in 1:969) {

mses[i] <- lm_mse(as.formula(formulas[i]), baseball_train, baseball_valid)

}

best_3_preds <- formulas[which.min(mses)]#When creating the `for loop`, we use the function `as.formula()` from the stats package to loop over all the equations contained in `formulas`. `as.formula()` transforms the characters of the input to a formula, so we can actually use it as a formula in our code.

#To select the best formula with the best MSE, we use the function `which.min()`, which presents the lowest value from the list provided.

# Initialise a vector we will fill with MSE values

mses <- rep(0, 969)

# loop over all the formulas

for (i in 1:969) {

mses[i] <- lm_mse(as.formula(formulas[i]), baseball_train, baseball_valid)

}

# select the formula with the lowest MSE

best_3_preds <- formulas[which.min(mses)]6. Find the best set for 1, 2 and 4 predictors. Now select the best model from the models with the best set of 1, 2, 3, or 4 predictors in terms of its out-of-sample MSE

# Generate formulas

formulas_1 <- generate_formulas(p = 1, x_vars = x_vars, y_var = "Salary")

formulas_2 <- generate_formulas(p = 2, x_vars = x_vars, y_var = "Salary")

formulas_4 <- generate_formulas(p = 4, x_vars = x_vars, y_var = "Salary")

# Initialise a vector we will fill with MSE values

mses_1 <- rep(0, length(formulas_1))

mses_2 <- rep(0, length(formulas_2))

mses_4 <- rep(0, length(formulas_4))

# loop over all the formulas

for (i in 1:length(formulas_1)) {

mses_1[i] <- lm_mse(as.formula(formulas_1[i]), baseball_train, baseball_valid)

}

for (i in 1:length(formulas_2)) {

mses_2[i] <- lm_mse(as.formula(formulas_2[i]), baseball_train, baseball_valid)

}

for (i in 1:length(formulas_4)) {

mses_4[i] <- lm_mse(as.formula(formulas_4[i]), baseball_train, baseball_valid)

}

# Compare mses

min(mses_1)

min(mses_2)

min(mses)

min(mses_4)

# min(mses_4) is lowest of them all!

# So let's see which model that is

formulas_4[which.min(mses_4)]## [1] 163444.9

## [1] 131244.8

## [1] 121754.9

## [1] 115821.2

## [1] "Salary ~ AtBat + Hits + CRBI + Division"Part 2: to be completed during the lab

7. a) Calculate the test MSE for the model with the best number of predictors. You can first train the model with the best predictors on the combination of training and validation set and save it to a variable. Also, you will need a function that calculates the mse.

# Estimate model and calculate mse

lm_best <- lm(Salary ~ CAtBat + CHits + CHmRun + PutOuts, baseball_val_and_test)

mse <- function(y_true, y_pred) mean((y_true - y_pred)^2)

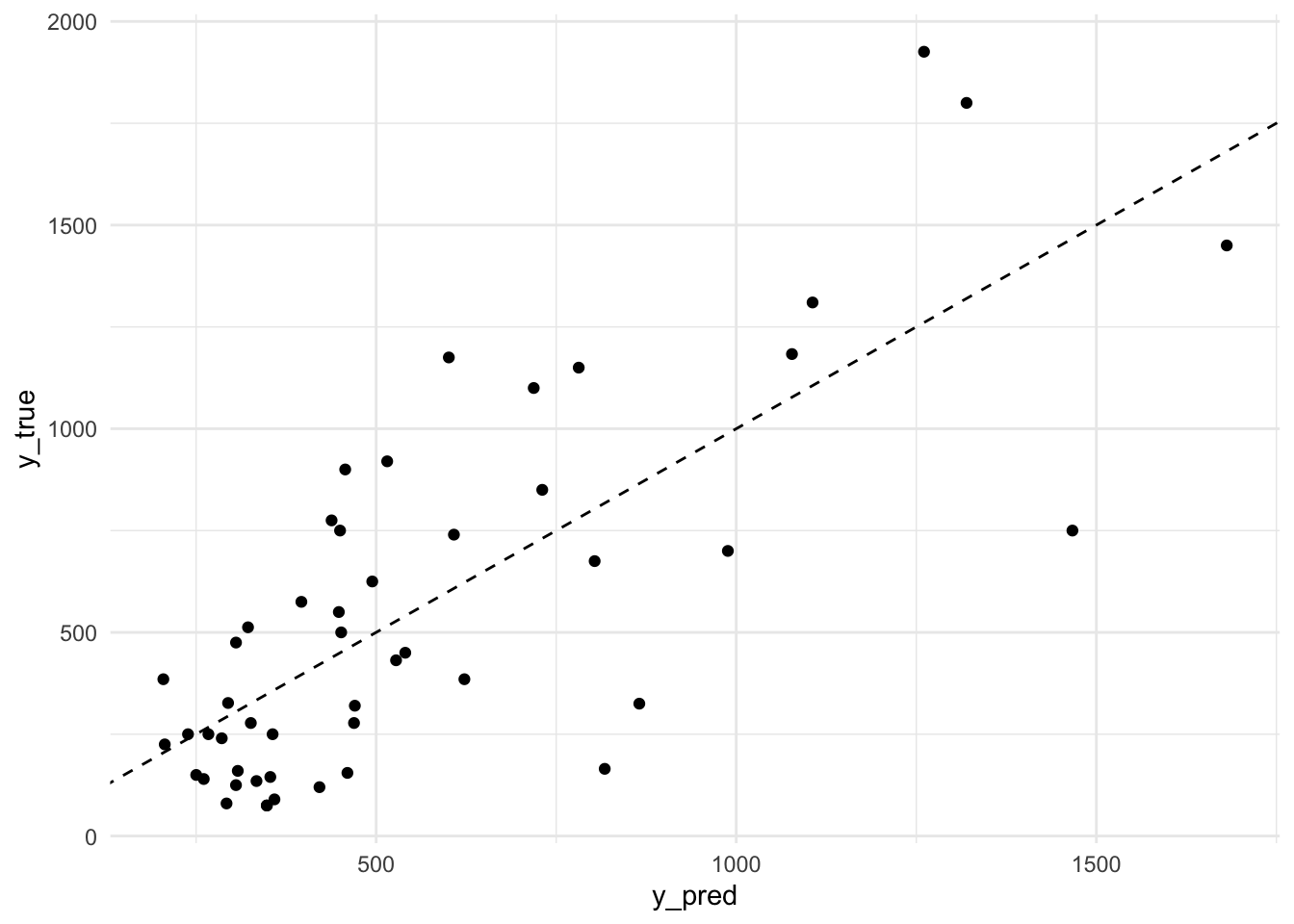

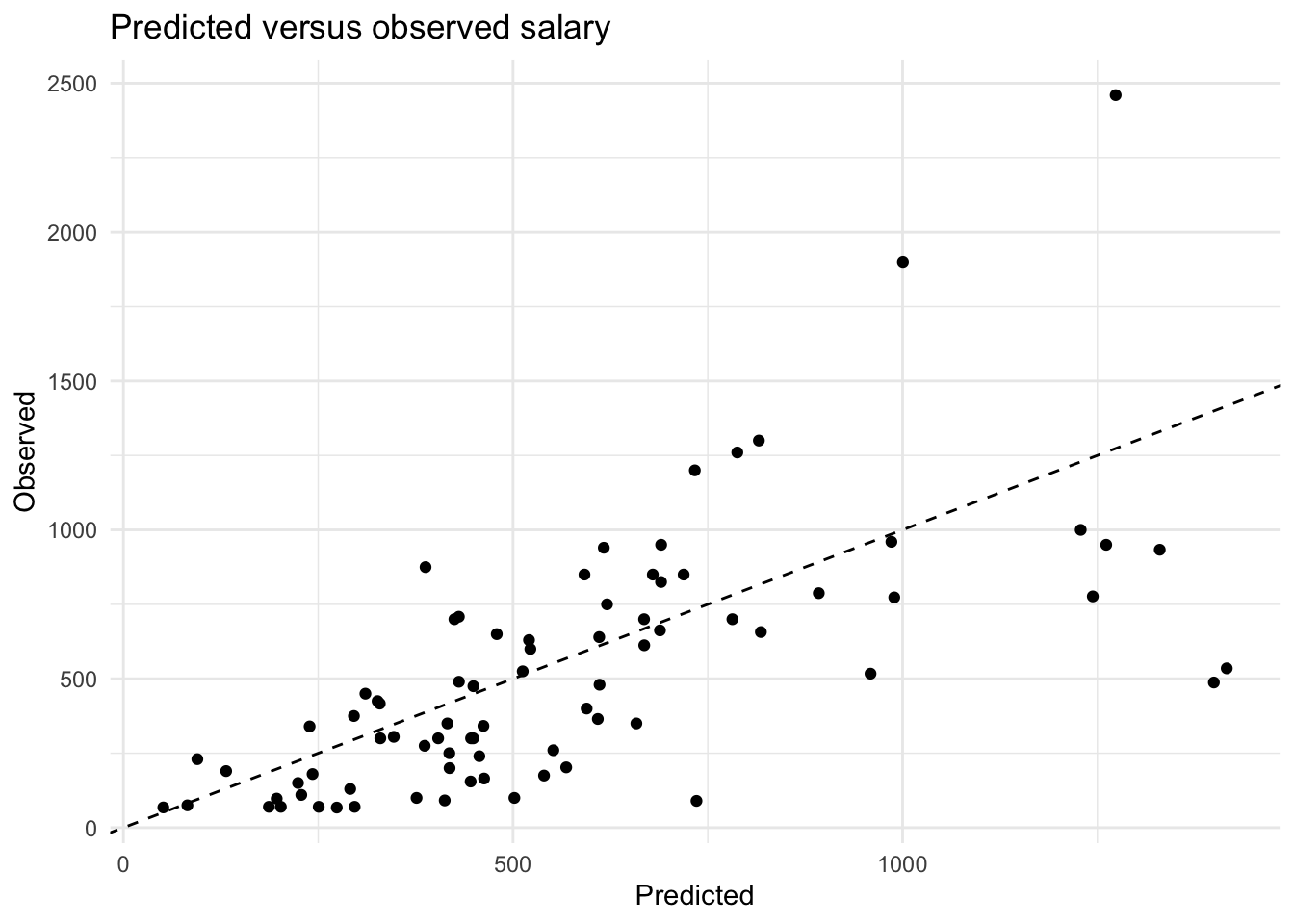

mse(baseball_test$Salary, predict(lm_best, newdata = baseball_test))## [1] 84766.417. b) Using the model with the best number of predictors (the one you created in the previous question), create a plot comparing predicted values (mapped to x position) versus observed values (mapped to y position) of baseball_test.

# create a plot

tibble(

y_true = baseball_test$Salary,

y_pred = predict(lm_best, newdata = baseball_test)

) %>%

ggplot(aes(x = y_pred, y = y_true)) +

geom_abline(slope = 1, intercept = 0, lty = 2) +

geom_point() +

theme_minimal()

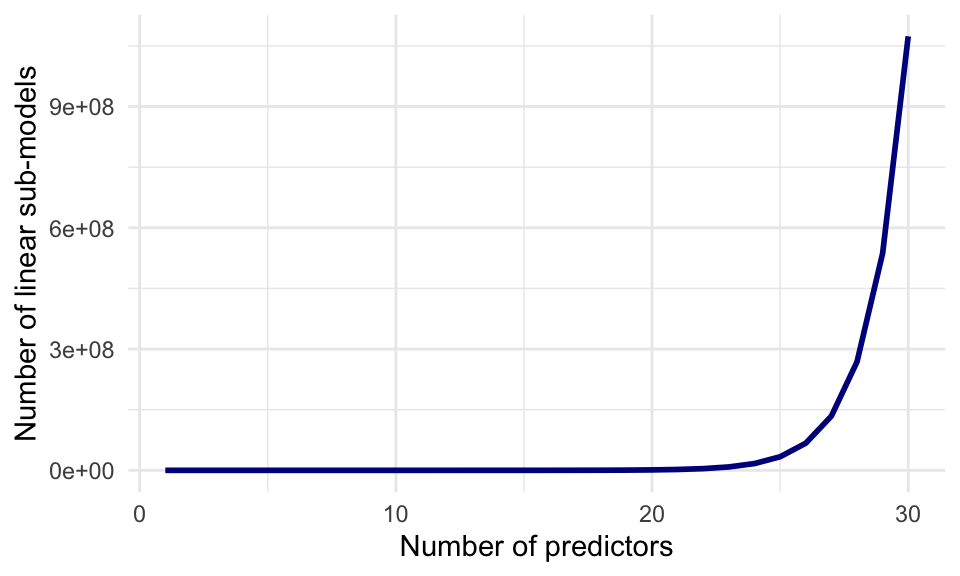

Through enumerating all possibilities, we have selected the best subset of at most 4 non-interacting predictors for the prediction of baseball salaries. This method works well for few predictors, but the computational cost of enumeration increases quickly to the point where it is not feasible to enumerate all combinations of variables:

## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

## ℹ Please use `linewidth` instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

## generated.

Regularization with glmnet

glmnet is a package that implements efficient (quick!) algorithms for LASSO and ridge regression, among other things.

8. Skim through the help file of glmnet. We are going to perform a linear regression with normal (gaussian) error terms. What format should our data be in?

# We need to input a predictor matrix x and a response (outcome) variable y,

# as well as a family = "gaussian" Again, we will try to predict baseball salary, this time using all the available variables and using the LASSO penalty to perform subset selection. For this, we first need to generate an input matrix.

9. First generate the input matrix using (a variation on) the following code. Remember that the “.” in a formula means “all available variables”. Make sure to check that this x_train looks like what you would expect.

x_train <- model.matrix(Salary ~ ., data = baseball_train)

head(x_train)## (Intercept) AtBat Hits HmRun Runs RBI Walks Years CAtBat CHits

## -Andre Dawson 1 496 141 20 65 78 37 11 5628 1575

## -Alfredo Griffin 1 594 169 4 74 51 35 11 4408 1133

## -Argenis Salazar 1 298 73 0 24 24 7 3 509 108

## -Andy VanSlyke 1 418 113 13 48 61 47 4 1512 392

## -Alan Wiggins 1 239 60 0 30 11 22 6 1941 510

## -Buddy Bell 1 568 158 20 89 75 73 15 8068 2273

## CHmRun CRuns CRBI CWalks LeagueN DivisionW PutOuts Assists

## -Andre Dawson 225 828 838 354 1 0 200 11

## -Alfredo Griffin 19 501 336 194 0 1 282 421

## -Argenis Salazar 0 41 37 12 0 1 121 283

## -Andy VanSlyke 41 205 204 203 1 0 211 11

## -Alan Wiggins 4 309 103 207 0 0 121 151

## -Buddy Bell 177 1045 993 732 1 1 105 290

## Errors NewLeagueN

## -Andre Dawson 3 1

## -Alfredo Griffin 25 0

## -Argenis Salazar 9 0

## -Andy VanSlyke 7 1

## -Alan Wiggins 6 0

## -Buddy Bell 10 1The model.matrix() function takes a dataset and a formula and outputs the predictor matrix where the categorical variables have been correctly transformed into dummy variables, and it adds an intercept. It is used internally by the lm() function as well!

10. Using glmnet(), perform a LASSO regression with the generated x_train as the predictor matrix and Salary as the response variable. Set the lambda parameter of the penalty to 15. NB: Remove the intercept column from the x_matrix – glmnet adds an intercept internally.

result <- glmnet(x = x_train[, -1], # X matrix without intercept

y = baseball_train$Salary, # Salary as response

family = "gaussian", # Normally distributed errors

alpha = 1, # LASSO penalty

lambda = 15) # Penalty value11. The coefficients for the variables are in the beta element of the list generated by the glmnet() function. Which variables have been selected? You may use the coef() function.

rownames(coef(result))[which(coef(result) != 0)]## [1] "(Intercept)" "Hits" "Walks" "CRuns" "CRBI"

## [6] "LeagueN" "DivisionW" "PutOuts" "Errors"12. Now we will create a predicted versus observed plot for the baseball_valid data using the model we just generated. To do this, first generate the input matrix with the baseball_valid data. Then use the predict() function to generate the predictions for the baseball_valid data using the model matrix and the model you created in question 10. Finally, create the predicted versus observed plot for the validation data. What is the MSE on the validation set?

x_valid <- model.matrix(Salary ~ ., data = baseball_valid)[, -1]

y_pred <- as.numeric(predict(result, newx = x_valid))

tibble(Predicted = y_pred, Observed = baseball_valid$Salary) %>%

ggplot(aes(x = Predicted, y = Observed)) +

geom_point() +

geom_abline(slope = 1, intercept = 0, lty = 2) +

theme_minimal() +

labs(title = "Predicted versus observed salary")

mse(baseball_valid$Salary, y_pred)## [1] 100935.7Tuning lambda

Like many methods of analysis, regularized regression has a tuning parameter. In the previous section, we’ve set this parameter to 15. The lambda parameter changes the strength of the shrinkage in glmnet(). Changing the tuning parameter will change the predictions, and thus the MSE. In this section, we will select the tuning parameter based on out-of-sample MSE.

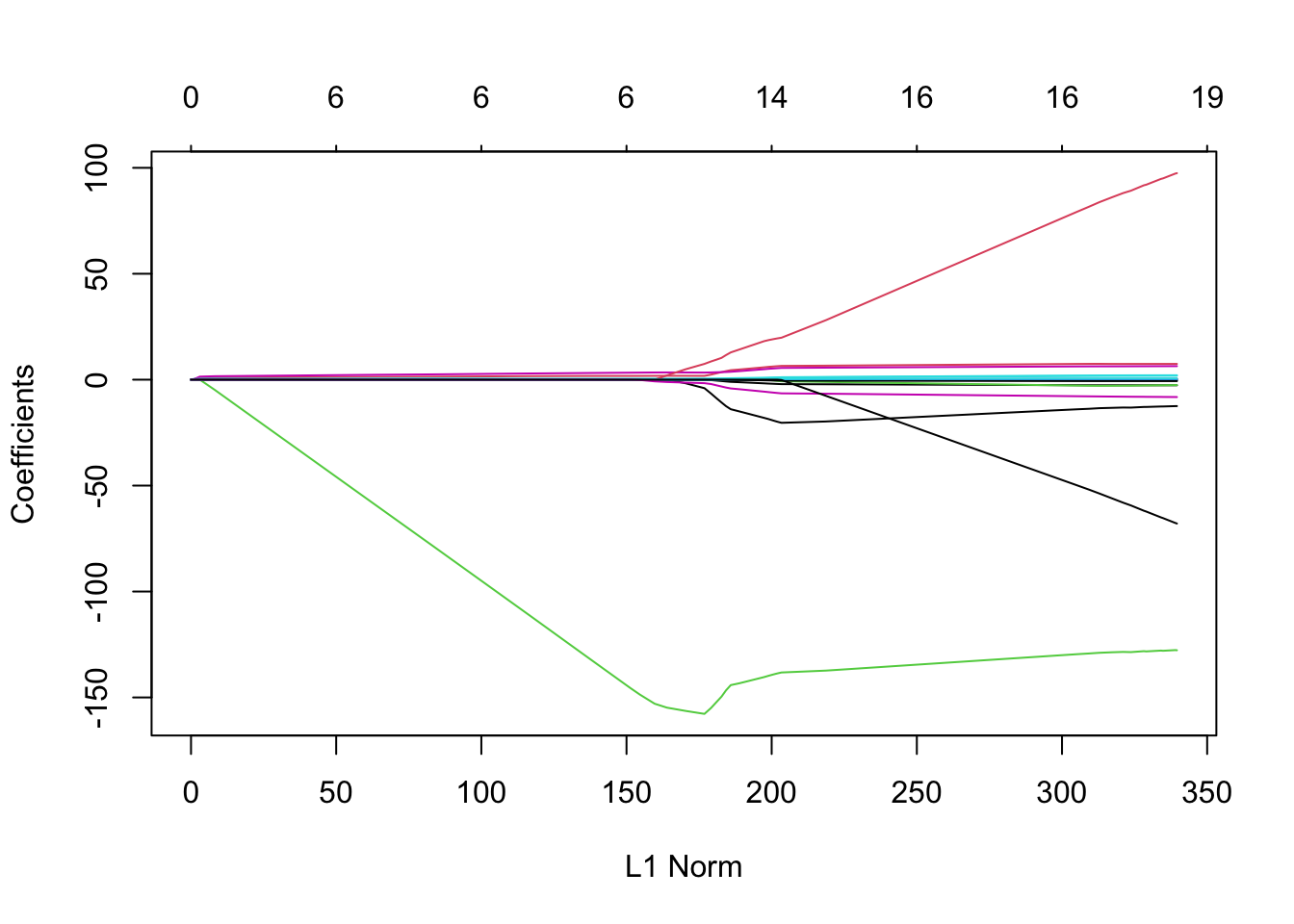

13. a) Fit a LASSO regression model on the same data as before, but now do not enter a specific lambda value. What is different about the object that is generated? Hint: use the coef() and plot() methods on the resulting object.

result_nolambda <- glmnet(x = x_train[, -1], y = baseball_train$Salary,

family = "gaussian", alpha = 1)

# This object contains sets of coefficients for different values of lambda,

# i.e., different models ranging from an intercept-only model (very high

# lambda) to almost no shrinkage (very low lambda).

plot(result_nolambda)

13. b) To help you interpret the obtained plot, Google and explain the qualitative relationship between L1 norm (the maximum allowed sum of coefs) and lambda.

For deciding which value of lambda to choose, we could work similarly to what we have don in the best subset selection section before. However, the glmnet package includes another method for this task: cross validation.

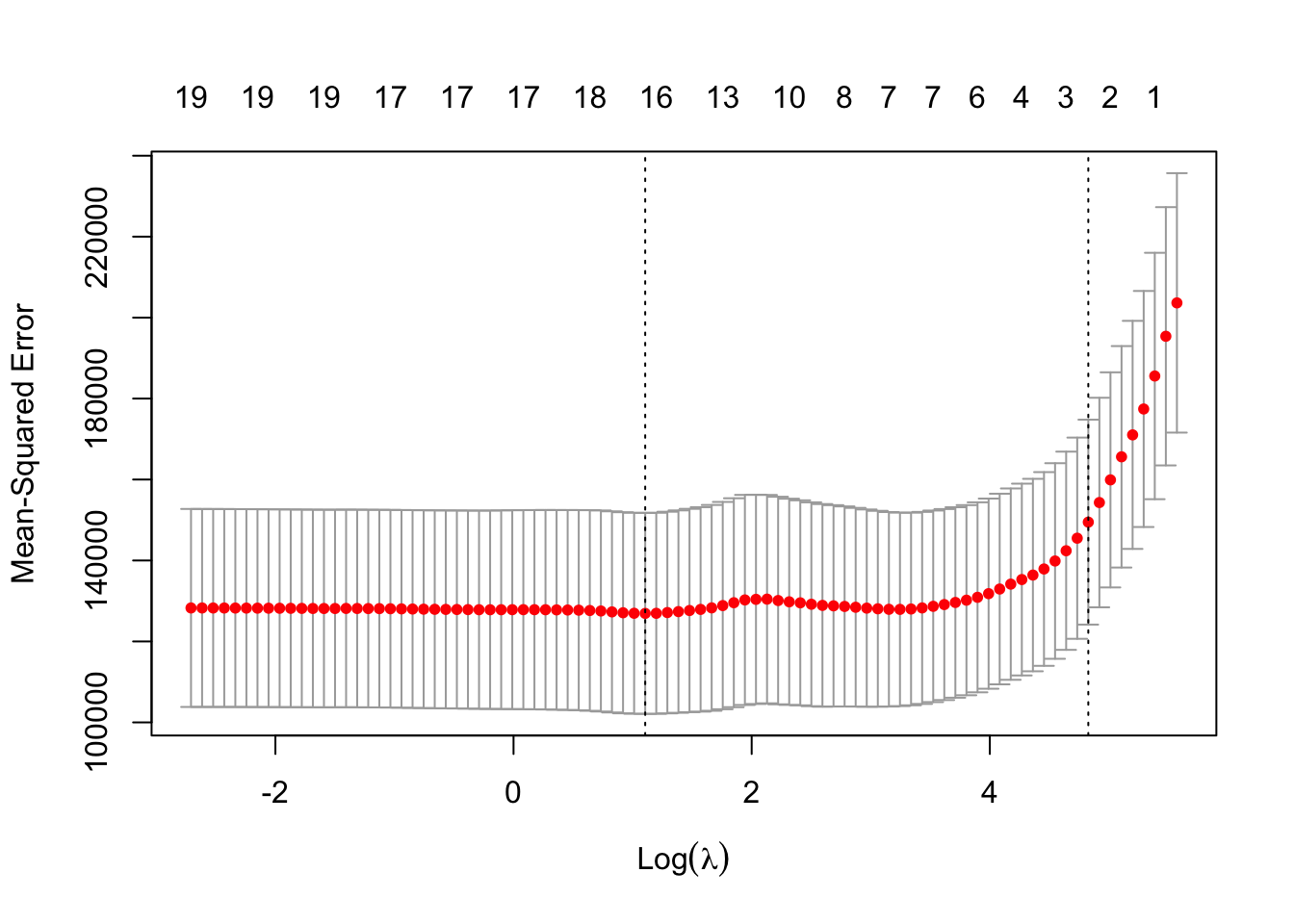

14. Use the cv.glmnet function to determine the lambda value for which the out-of-sample MSE is lowest using 15-fold cross validation. As your dataset, you may use the training and validation sets bound together with bind_rows(). What is the best lambda value?

Note You can remove the first column of the model.matrix object, which contains the intercept, for use in cv.glmnet. In addition, To obtain the best lambda value, you can call the output value lambda.min from the object in which you stored the results of calling cv.glmnet.

x_cv <- model.matrix(Salary ~ ., bind_rows(baseball_train, baseball_valid))[, -1]

result_cv <- cv.glmnet(x = x_cv, y = c(baseball_train$Salary, baseball_valid$Salary), nfolds = 15)

best_lambda <- result_cv$lambda.min

best_lambda## [1] 3.02155615. Try out the plot() method on this object. What do you see? What does this tell you about the bias-variance tradeoff?

plot(result_cv)

# the MSE is high with very small values of lambda (no shrinkage) and

# with very large values of lambda (intercept-only model).

# introducing a bit of bias lowers the variance relatively strongly

# (fewer variables in the model) and therefore the MSE is reduced.It should be noted, that for all these previous exercises they can also be completed using the Ridge Method which is not covered in much depth during this practical session. To learn more about this method please refer back Section 6.2 in the An Introduction to Statistical Learning Textbook.

Comparing methods (optional)

This last exercise is optional. You can also opt to view the answer when made available and try to understand what is happening in the code.

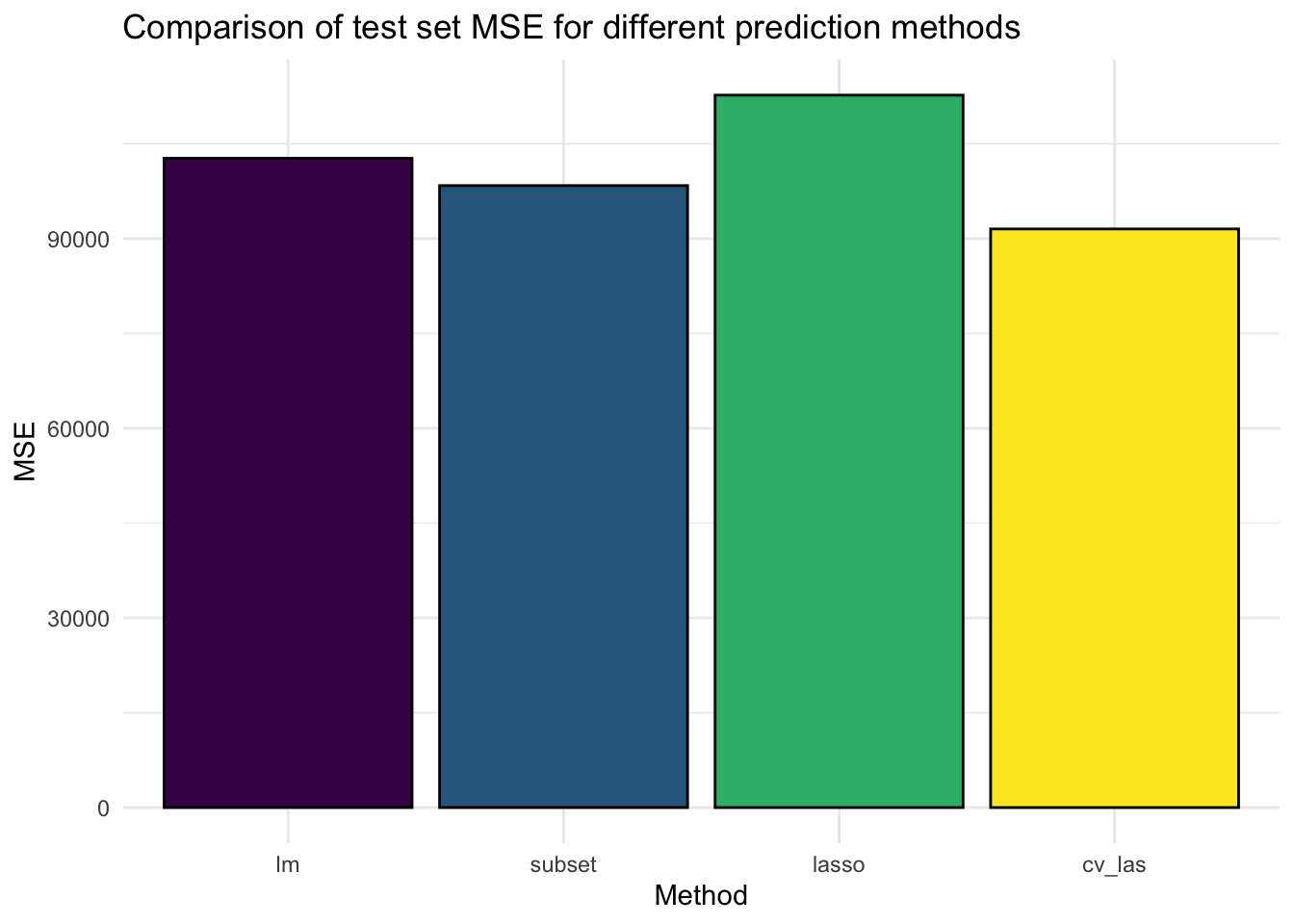

16. Create a bar plot comparing the test set (baseball_test) MSE of (a) linear regression with all variables, (b) the best subset selection regression model we created, (c) LASSO with lambda set to 50, and (d) LASSO with cross-validated lambda. As training dataset, use the rows in both the baseball_train and baseball_valid

# create this new training dataset and the test dataset

train_data <- bind_rows(baseball_train, baseball_valid)

x_test <- model.matrix(Salary ~ ., data = baseball_test)[, -1]

# generate predictions from the models

y_pred_ols <- predict(lm(Salary ~ ., data = train_data), newdata = baseball_test)

y_pred_sub <- predict(lm(Salary ~ Runs + CHits + Division + PutOuts, data = train_data),

newdata = baseball_test)

# these two use x_cv from the previous exercises

y_pred_las <- as.numeric(predict(glmnet(x_cv, train_data$Salary, lambda = 50), newx = x_test))

y_pred_cv <- as.numeric(predict(result_cv, newx = x_test, s = best_lambda))

# Calculate MSEs

mses <- c(

mse(baseball_test$Salary, y_pred_ols),

mse(baseball_test$Salary, y_pred_sub),

mse(baseball_test$Salary, y_pred_las),

mse(baseball_test$Salary, y_pred_cv)

)

# Create a plot

tibble(Method = as_factor(c("lm", "subset", "lasso", "cv_las")), MSE = mses) %>%

ggplot(aes(x = Method, y = MSE, fill = Method)) +

geom_bar(stat = "identity", col = "black") +

theme_minimal() +

theme(legend.position = "none") +

labs(title = "Comparison of test set MSE for different prediction methods") +

scale_fill_viridis_d() # different colour scale